14 UX Principles for Designing AI Products Users Actually Trust

Over the past few years, AI has gone from buzzword to backbone. It’s no longer confined to futuristic ideas; it’s embedded in how we search, shop, work, and connect. From auto-generated playlists to predictive text and fraud detection, AI is shaping everyday digital experiences, often without us even realizing it. But with this shift comes a new responsibility: designing the way people interact with intelligent systems. Too often, the race to implement AI features focuses on what the technology can do: faster results, smarter predictions, more automation. What gets overlooked is what it should do for the people using it. That’s where UX comes in.

Priya Sreekumar · 1 Jun 2025 · 12-minute read

The Human Responsibility in AI UX


Designing for AI isn’t just about usability. It’s about building trust, reducing uncertainty, and creating clarity where there might otherwise be confusion. And unlike traditional interfaces, AI introduces ambiguity, automation, and decision-making that users might not fully understand, or even want.

We believe good AI design means making intelligence feel human, helpful, and honest. It’s not just a technical challenge. It’s a human one. And it’s up to us, as designers, to meet it with empathy, intention, and care.

How to Design AI Products

1. Identify a real user need

There’s a rush to add AI into digital products, especially with the rise of generative models. But often, these features end up feeling like tech demos rather than helpful tools. Why? Because they’re built around capabilities, not actual user problems.

Just because AI can do something doesn’t mean it should. If the same outcome can be achieved with a rule-based system, a simpler workflow, or better content, go with that. AI introduces complexity, both for teams and for users. It requires training data, tuning, ongoing evaluation, and trust-building. That’s a high bar for something that might not be essential.

A good place to start is by asking:

- What user problem are we solving?

- Is AI the best way to solve it?

- Will it help the user move forward faster or more confidently?

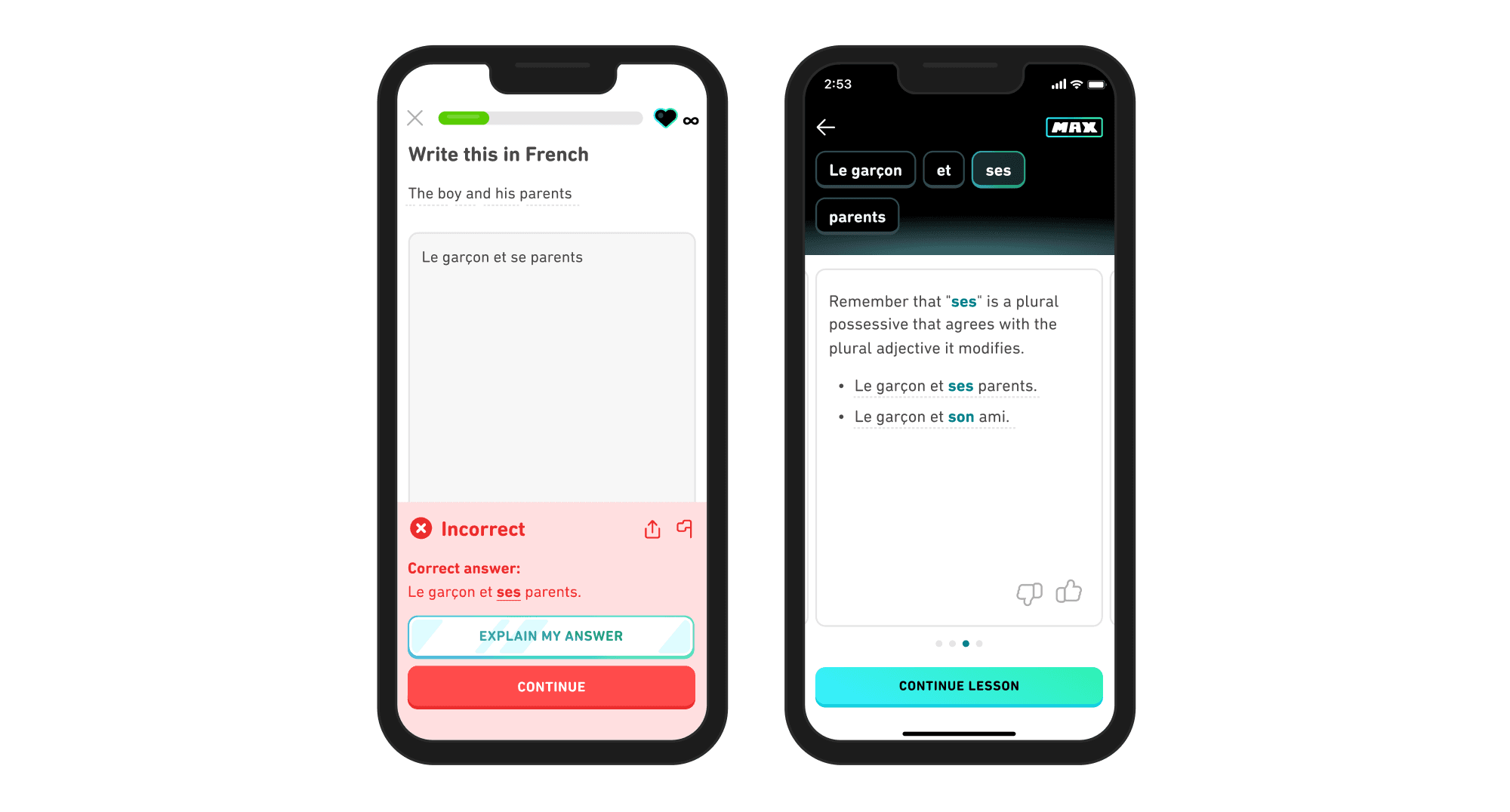

Image source: Duolingo

Duolingo shows what this looks like in practice: by turning moments of confusion into learning opportunities, its AI features enhance the overall educational experience.

AI should enhance momentum, not create distractions. The goal isn’t to add intelligence; it’s to add value.

2. Make the AI's reasoning visible

AI often works behind the scenes, flagging issues, surfacing suggestions, making decisions. But when users can’t see the why behind those actions, trust quickly erodes. It’s one thing to get a recommendation; it’s another to understand why it showed up.

This is where transparency plays a critical role.

People don’t expect AI to be perfect, but they do want to feel informed. Showing the reasoning, even in simple terms, can make the system feel more reliable and less mysterious. For example:

- “Recommended based on your recent purchases”

- “Flagged as unusual due to a new login location”

- “Suggested because similar users found this helpful”

These explanations don’t need to be deeply technical. What matters is that they’re clear, honest, and offered at the right time.
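To make this concrete, here is a minimal Python sketch, assuming the recommendation or detection system returns a machine-readable reason code alongside each result. The reason codes, the `EXPLANATIONS` table, and the `explain` function are hypothetical. The idea is that the interface translates internal signals into one short, honest sentence, and stays silent rather than inventing a reason when none is available.

```python
from typing import Optional

# Hypothetical reason codes an AI system might attach to each result,
# mapped to the plain-language explanations shown to the user.
EXPLANATIONS = {
    "recent_purchase": "Recommended based on your recent purchases",
    "new_login_location": "Flagged as unusual due to a new login location",
    "similar_users": "Suggested because similar users found this helpful",
}


def explain(reason_code: Optional[str]) -> Optional[str]:
    """Return a user-facing explanation, or None if there is nothing honest to say.

    Missing or unrecognized codes return None instead of a made-up reason,
    so the UI can simply leave the explanation line out.
    """
    if reason_code is None:
        return None
    return EXPLANATIONS.get(reason_code)
```

For example, `explain("new_login_location")` returns the login-location message, while an unknown code returns `None` and no explanation is shown.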

On the other hand, if users are left guessing, they may assume the worst: bias, randomness, or error. Over time, that uncertainty adds friction and drives people away.

Transparency isn’t just a nice-to-have. It’s a design strategy for building confidence and giving users a sense of control. And in AI-driven experiences, that’s what makes the difference between feeling helped and feeling handled.

3. Keep humans in control

AI can be incredibly helpful, but only when it feels like a partner, not a boss. When users lose the sense of control, even smart automation starts to feel frustrating or intrusive.

We’ve all seen it:

- An AI assistant books a meeting you didn’t approve.

- A predictive feature finishes your sentence incorrectly.

- A security system locks you out because of a false positive.

These aren’t just glitches. They’re moments where the system overstepped.

To design respectful AI, we need to keep users in the driver’s seat. That means:

- Offering choices instead of enforcing outcomes

- Letting users accept, reject, or modify AI suggestions

- Providing clear ways to pause, disable, or opt out of features
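One way to encode “accept, reject, or modify” is to treat every AI suggestion as a pending proposal that changes nothing until the user acts. The Python sketch below is illustrative only; the `Suggestion` class and its states are assumptions, not any particular product’s API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    REJECTED = "rejected"


@dataclass
class Suggestion:
    """An AI proposal that has no effect until the user decides."""
    original: str
    proposed: str
    status: Status = Status.PENDING
    final: Optional[str] = None  # text actually applied, if any

    def accept(self, edited: Optional[str] = None) -> str:
        # The user can accept the proposal as-is or modify it first.
        self.final = edited if edited is not None else self.proposed
        self.status = Status.ACCEPTED
        return self.final

    def reject(self) -> str:
        # Rejecting leaves the user's original content untouched.
        self.final = None
        self.status = Status.REJECTED
        return self.original

    def current_text(self) -> str:
        # While pending or rejected, the user keeps seeing their own text.
        return self.final if self.status is Status.ACCEPTED else self.original
```

The design choice is that the default state is “nothing happened”: the system proposes, and only the user’s explicit action changes the outcome.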

Control doesn’t limit the power of AI; it unlocks it. When users feel they can say “yes,” “no,” or “not now,” they’re more likely to engage confidently.

The best AI experiences are collaborative. They support the user’s intent, rather than override it. Because in the end, the smartest system still needs human permission to matter.

4. Design for when AI gets it wrong

Every AI system makes mistakes. That isn’t a failure of the technology; it’s part of how it works. But how we design for those moments makes all the difference.

When users encounter an error, their experience hinges less on what went wrong and more on how the system responds. Silence or deflection leads to frustration. Clarity and accountability build trust.

Here’s what helps:

- A clear, human-friendly error message

- A next step: retry, undo, or fix manually

- An easy way to give feedback or flag the issue

Users are surprisingly forgiving when a product admits its limits. What they want is acknowledgment, not perfection.

The key is to treat these moments as touchpoints, not breakdowns. A thoughtful error flow can turn a frustrating experience into a moment of connection. And when handled with transparency and humility, even failure can deepen loyalty.

5. Use context carefully and respectfully

AI works best when it understands context: who the user is, what they’re doing, and when they’re doing it. With the right timing, even a small suggestion can feel like magic. But without context, or with too much of it, the same suggestion can quickly become annoying or even intrusive.

The line between helpful and creepy is thinner than it seems.

This is why designing with context isn’t just about recognition; it’s about restraint. The goal isn’t for AI to act more often, but to act more appropriately.

To get it right:

- Use smart defaults that match the situation

- Let users customize or opt out easily

- Avoid assumptions, and ask when you’re not sure

The user should always have the final say. Context-aware AI should feel like a quiet helper in the background, not a system making decisions uninvited.

Handled with care, context isn’t about tracking. It’s about timing. And when you get it right, it creates moments that feel intuitive, respectful, and genuinely useful.

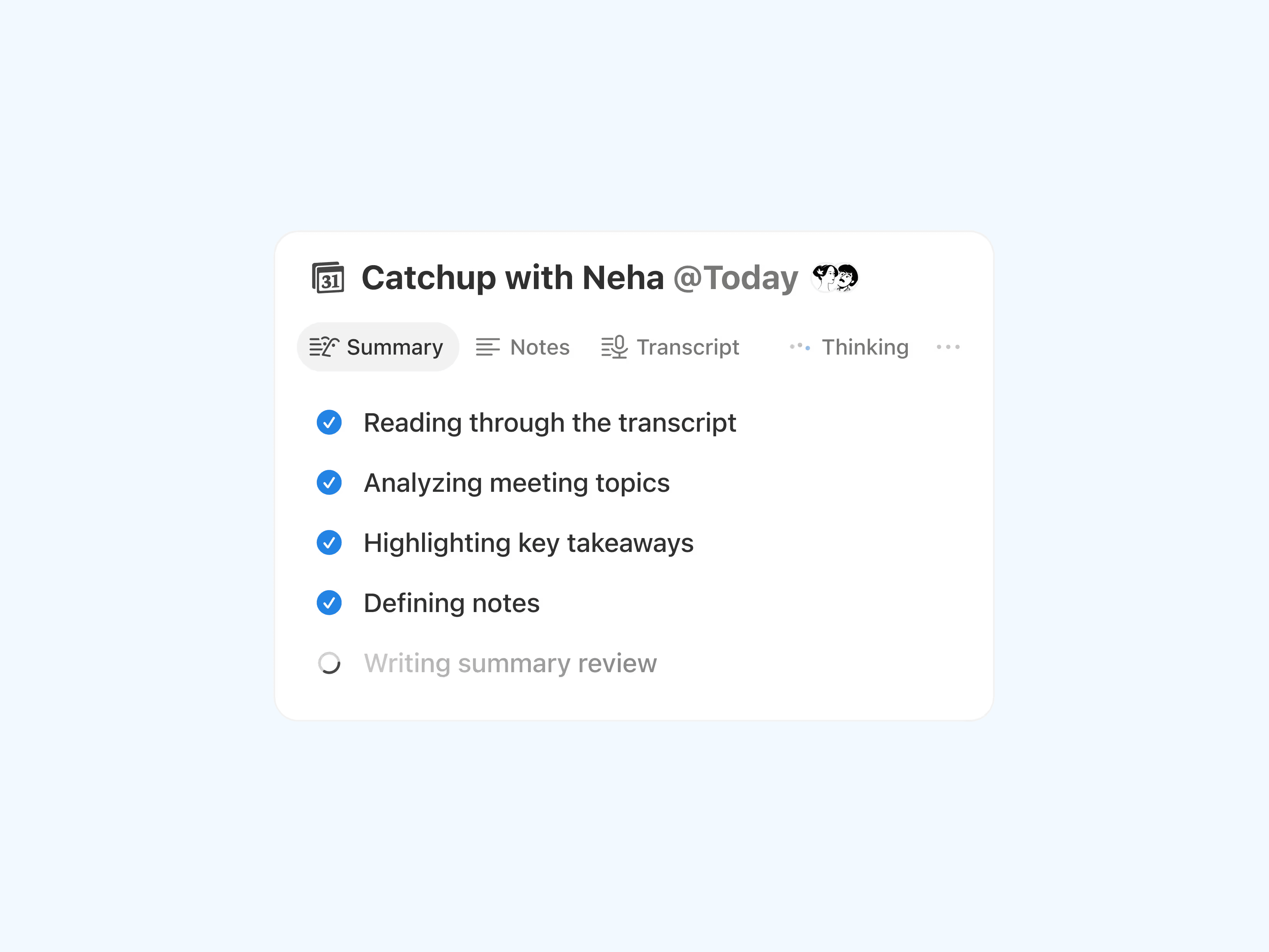

Take Notion AI, for example. When you begin writing or organizing a document, Notion’s AI assistant offers context-aware suggestions, like summarizing a long meeting note, generating a to-do list from your text, or drafting action items based on recent updates. These suggestions surface when they’re most relevant, and they’re never forced. The user always has control to accept, edit, or ignore them.

That’s the difference between a system that intrudes, and one that truly assists.

Image Source: Notion

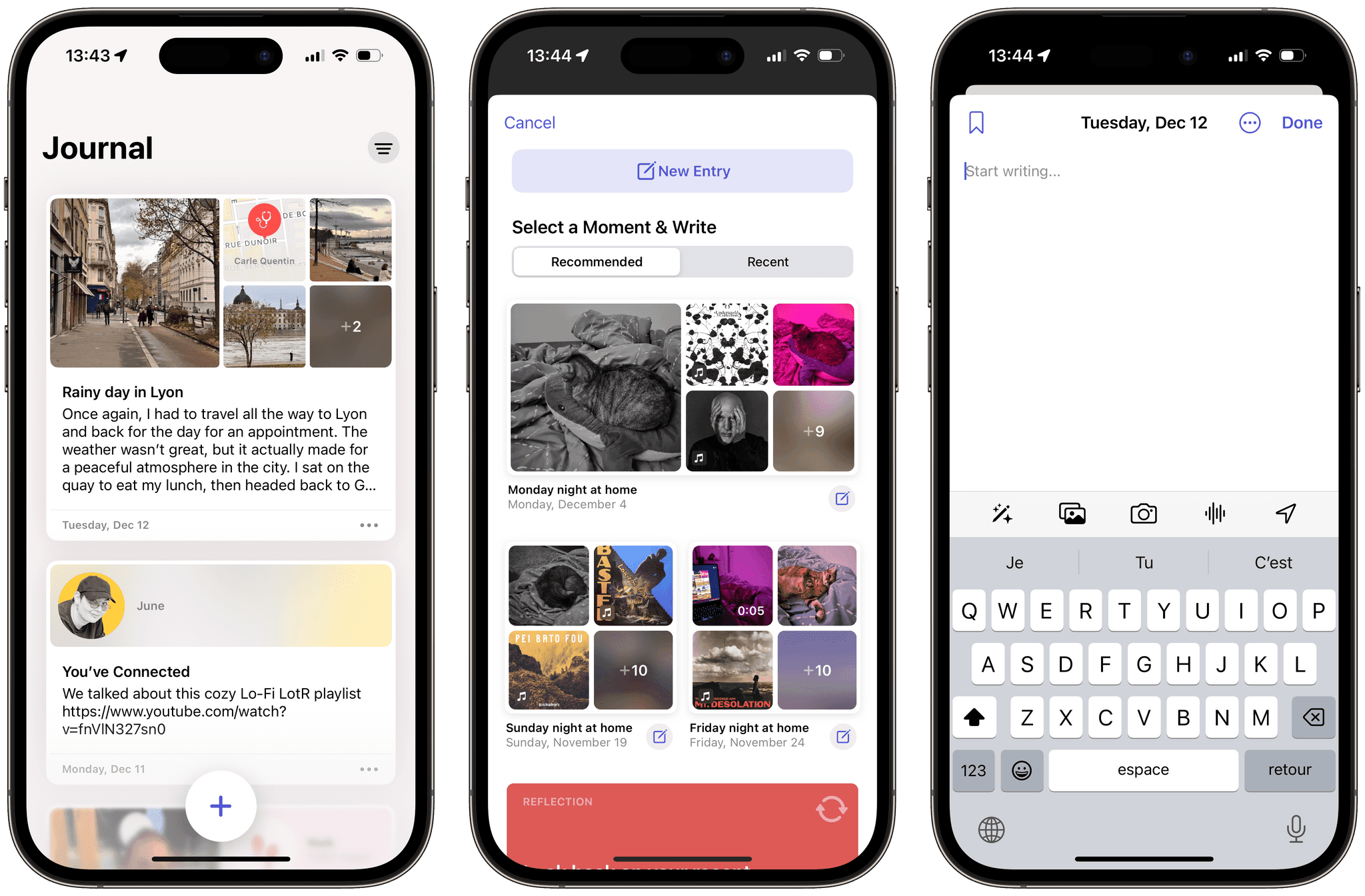

Another example is Apple’s Journal app. It uses rich contextual signals, like your location, current weather, activity data, and time of day to offer meaningful journaling suggestions. For instance, after a morning workout at the park on a sunny day, the app might prompt you to reflect on how you felt during the activity. It surfaces relevant entry ideas that feel natural and timely, not random or generic by combining environmental and personal context.

Image source: MacStories

6. Bring in emotional intelligence

As AI becomes part of everyday interactions, emotional design is no longer optional; it’s essential. People don’t just respond to what a system does. They respond to how it feels in the moment.

This isn’t about adding emojis or soft colors. It’s about understanding emotional context:

- A chatbot that pauses before responding

- A suggestion that waits for the right moment

- A message that gently acknowledges user frustration

These small choices build trust. They show that the system isn’t just smart; it’s considerate.

We often aim for frictionless experiences, but not all friction is bad. Sometimes, slowing down a moment gives users space to reflect, or signals that the system understands the emotional weight of a situation.

Emotionally intelligent design is about balance: knowing when to step in, and when to step back. And in AI-powered products, that sensitivity can be the difference between feeling seen and feeling dismissed.

7. Let your values guide the design

AI products aren’t neutral. Every decision (what the system does, what data it uses, who it serves) reflects underlying values. Whether intentional or not, design choices shape who benefits and who gets left out.

That’s why ethics can’t be something we address later. They need to be built into the product from day one.

As you integrate AI into your experience, ask the harder questions:

- Who might this unintentionally harm?

- Whose voices are being prioritized or excluded?

- Are we solving a real need, or just moving faster?

Responsible AI design means working with diverse users, testing for bias early, and being transparent about trade-offs. It also means recognizing that fairness and usability aren’t separate goals; they’re deeply connected.

The most trusted AI products are the ones that reflect human values clearly, not just in what they say but in what they choose to do.

8. Let AI and your UX evolve

AI doesn’t stand still, and neither should the design around it. The best AI products aren’t finished at launch. They grow, adapt, and improve based on how real people use them.

That means creating systems that listen:

- Feedback loops that capture what works (and what doesn’t)

- Iterative updates based on real-world behavior

- Transparent changelogs that show progress, not just polish

These aren’t just internal tools. They’re visible signals to your users that the system is evolving and that their experience matters.

Take GitHub Copilot, for example. It doesn’t try to get everything right on day one. Instead, it is refined based on how developers interact with it, so its suggestions improve over time. The product gets better because it keeps listening.

When designing AI features, treat improvement as part of the experience. Don’t aim for a perfect first impression. Aim for a product that’s responsive, self-aware, and always learning.

9. Break AI into smaller, focused tasks

AI doesn’t need to solve everything at once to be valuable. In fact, trying to do too much can hurt the experience, making the system harder to design, harder to trust, and harder for users to grasp.

The key is to scope AI into small, purposeful tasks that support real user needs. Think less about replacing workflows and more about improving specific moments:

- Summarize a conversation thread

- Suggest a reply based on recent context

- Flag a possible error or inconsistency

Smaller scopes make the value clearer. They also make AI easier to test, explain, and refine over time.

Take Microsoft Word with Copilot, for example. Rather than attempting to rewrite full documents automatically, Copilot focuses on specific tasks like summarizing a document, rewriting a selected paragraph in a different tone, or drafting a section based on a few bullet points. These are small but impactful interactions that let users stay in control while benefiting from intelligent support.

When AI supports tasks rather than replacing entire flows, users stay in control, and confidence grows one useful interaction at a time.

10. Set clear expectations from the start

AI can feel powerful and open-ended, but that makes it even more important to set clear boundaries. Users need to know what the system can do, what it can’t do, and what kind of results they should expect.

When expectations are vague or overpromised, frustration follows. Worse, it erodes trust. People may assume the system is broken, unreliable, or simply not for them.

Clear UX helps prevent this. A few simple tools go a long way:

- Onboarding that introduces capabilities and limits

- Microcopy that explains feature behavior

- Hints or warnings that guide usage in real time

Even a small statement like ChatGPT’s “May occasionally generate incorrect information” can frame the entire user experience. It signals transparency, sets a realistic tone, and reduces the risk of overreliance.

Importantly, setting expectations isn’t just about what the AI can do. It’s also about being honest about what it shouldn’t do. If the system isn’t designed to make decisions, say so. If it's still improving, let users know.

In a space where hype is high and trust is fragile, the most helpful thing a product can do is be upfront. Clear expectations don’t limit AI; they unlock more confident, more sustainable engagement.

11. Make experimentation safe and stress-free

Trying out AI features can feel risky. Users often wonder: What happens if I mess this up? Will it change something I didn’t mean to? Can I undo it? That hesitation can stop people from engaging at all. That’s why psychological safety isn’t just a nice-to-have; it’s a core part of good AI UX.

Users need to feel that it’s okay to try, fail, and explore without causing permanent damage. The more forgiving the interface, the more confident people become. And confidence leads to curiosity.

Here’s how to make that happen:

- Use non-destructive actions: preview modes, soft saves, and undo options

- Clearly label AI-generated content so users know what’s editable and optional

- Offer visual cues, like the way Google Docs shows AI-suggested text in gray until the user accepts it
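Non-destructive editing boils down to two rules: AI output starts as a preview that leaves the real content alone, and anything applied can be reverted. The Python sketch below assumes a simple text document; the `Document` class and its `propose`, `apply`, `discard`, and `undo` methods are hypothetical names chosen for illustration.

```python
from typing import List, Optional


class Document:
    """Text document where AI edits are previewed first and can always be undone."""

    def __init__(self, text: str) -> None:
        self.text = text
        self.pending: Optional[str] = None  # AI draft shown as a preview, not yet applied
        self._history: List[str] = []       # earlier versions, most recent last

    def propose(self, ai_text: str) -> None:
        # Show the AI output as a preview; the real text is untouched.
        self.pending = ai_text

    def discard(self) -> None:
        # Dismissing the preview costs nothing.
        self.pending = None

    def apply(self) -> None:
        # Save the current version first, so the change stays reversible.
        if self.pending is None:
            return
        self._history.append(self.text)
        self.text = self.pending
        self.pending = None

    def undo(self) -> bool:
        # Restore the previous version; return False if there is nothing to undo.
        if not self._history:
            return False
        self.text = self._history.pop()
        return True
```

Because every applied change pushes the previous version onto a history stack, a user can try a suggestion, dislike it, and get back exactly what they had.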

It also helps to make experimentation available in low-pressure moments. Introduce features gradually, in places where users already feel in control. That way, they can explore capabilities without feeling overwhelmed.

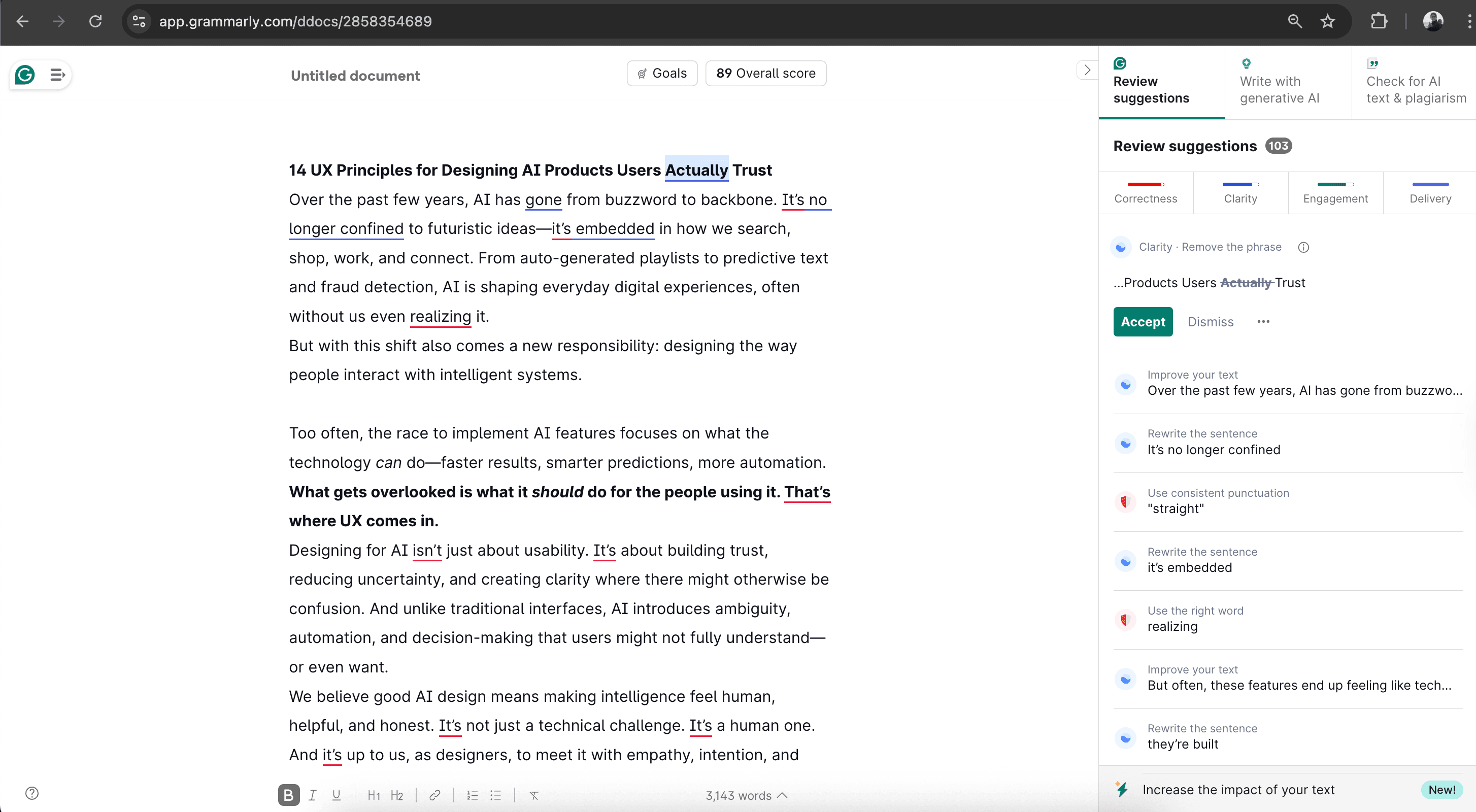

Take Grammarly, for example. Its AI suggestions appear as underlined text, paired with brief explanations and clear options to accept, ignore, or dismiss. No edits are made until the user gives consent. This makes experimentation feel safe: users can test suggestions without any fear of making permanent changes. It encourages engagement by putting control firmly in the user’s hands.

Users are more likely to play when they know they won’t break anything. And when they play, they learn. That’s how trust in your AI features is built: not through perfection, but through permission.

12. Make AI features discoverable without being disruptive

One of the biggest challenges with AI-powered features? Users often don’t know they’re there.

Unlike traditional tools, AI tends to run quietly in the background (summarizing, predicting, suggesting) without making a big entrance. And when features aren’t visible or clearly explained, they’re likely to go unused, no matter how powerful they are.

This is why discoverability matters: not just once during onboarding, but repeatedly, in context, and without being annoying.

Designers should think about:

- Where in the workflow an AI feature naturally fits

- How to introduce it without breaking flow (tooltips, nudges, gentle animations)

- When to resurface it to reinforce the value

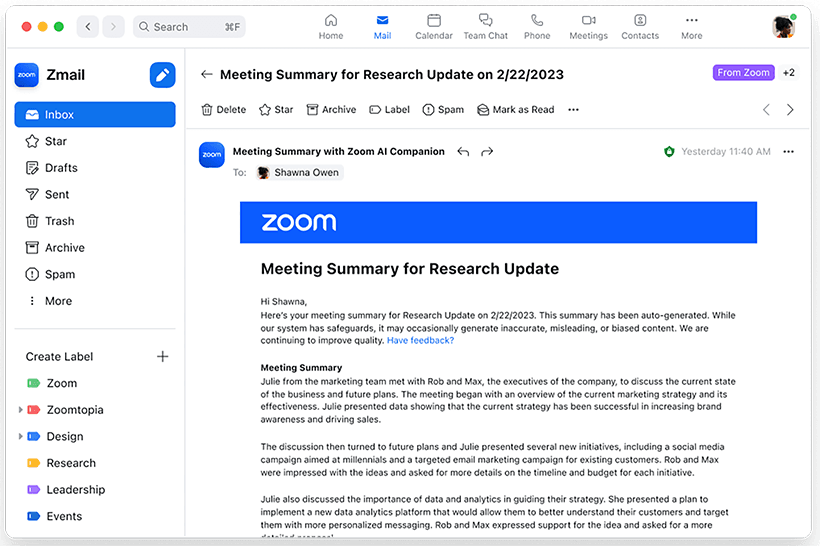

Zoom does this well. Features like message summaries or AI-generated meeting recaps are shared right when you need them subtly, via email, after a meeting, with action items for all participants.

Image source: Zoom

The goal is to make discovery feel like a natural part of the experience. Not loud. Not flashy. Just helpful, timely, and repeatable. If AI is going to support the user, the user has to know it’s there and feel invited, not instructed, to try it.

13. Design for AI uncertainty: don’t hide it

AI doesn’t always give definite answers, and that’s normal. Most AI systems work on probability, not certainty. But when users aren’t told that upfront, varying results can feel confusing or even broken.

That’s where good UX comes in: not to hide the uncertainty, but to communicate it clearly and respectfully.

It helps to show confidence levels in ways that feel human:

- “Looks like a cat” is easier to process than “87% confidence this is a cat”

- Soft language like “You might find this helpful” signals flexibility

- Visual cues, like faded suggestions or “try again” states, reinforce that nothing is final

Importantly, you also need to show users what happens if the AI isn’t sure. Include fallback options, allow for manual input, or offer next steps when results are unclear.
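The guidance above can be sketched as a small mapping from a model’s probability to hedged wording, with a fallback when confidence is too low. This Python sketch assumes the model returns a label and a probability between 0 and 1; the `describe` function and the 0.8 and 0.5 cutoffs are illustrative assumptions and would need tuning against real data.

```python
from typing import Optional


def describe(label: str, confidence: float, threshold: float = 0.5) -> Optional[str]:
    """Turn a model's probability into hedged, human wording.

    `label` is assumed to be a ready-to-read phrase such as "a cat".
    Returns None below `threshold`, signalling the UI to fall back to
    manual input or a "try again" state instead of showing a weak guess.
    The 0.8 cutoff is an illustrative assumption, not a standard.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= 0.8:
        return f"Looks like {label}"
    if confidence >= threshold:
        return f"Might be {label}"
    return None  # not sure enough: offer a fallback instead
```

Here a probability of 0.87 becomes “Looks like a cat”, 0.6 becomes “Might be a cat”, and 0.3 produces no claim at all, so the interface can ask the user instead.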

Framing outputs as suggestions rather than truths helps people feel empowered, not second-guessed. When users understand that uncertainty is part of how AI works, and see that it’s been handled thoughtfully, they’re more likely to trust the system, even when it’s not 100% right.

14. Turn feedback into a feature

AI systems don’t stand still, and they shouldn’t learn in isolation. Some of the most valuable insights come directly from users interacting with the product in real scenarios. That’s why collecting feedback isn’t just helpful; it’s essential.

Make it easy for users to react to AI outputs:

- Simple options like 👍/👎, star ratings, or “Was this helpful?” prompts

- Flags for incorrect or inappropriate responses

- Open fields for quick suggestions or corrections

But don’t stop there. Close the loop and let users know their input matters:

- Acknowledge feedback with thank-you messages

- Show visible improvements over time

- Highlight community-driven changes or feature refinements
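Capturing feedback and closing the loop can start very small. The Python sketch below assumes each AI output has an ID; the `Feedback` and `FeedbackLog` classes, the acknowledgment wording, and the helpfulness metric are hypothetical. It records thumbs up/down, flags, and free-text corrections, acknowledges every submission right away, and computes a simple helpfulness rate that a team could track over time.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Feedback:
    output_id: str                   # which AI output this reacts to
    helpful: Optional[bool] = None   # thumbs up (True) / thumbs down (False)
    flagged: bool = False            # marked incorrect or inappropriate
    comment: str = ""                # optional quick suggestion or correction


@dataclass
class FeedbackLog:
    items: List[Feedback] = field(default_factory=list)

    def submit(self, fb: Feedback) -> str:
        self.items.append(fb)
        # Acknowledge immediately so feedback never feels like it vanished.
        if fb.flagged:
            return "Thanks for flagging this. We'll review it."
        return "Thanks for your feedback."

    def helpful_rate(self, output_id: str) -> Optional[float]:
        # Share of thumbs-up votes for one output; None if nobody voted.
        votes = [f.helpful for f in self.items
                 if f.output_id == output_id and f.helpful is not None]
        if not votes:
            return None
        return sum(votes) / len(votes)
```

Flags and comments are stored alongside votes, so the same log can feed both a review queue and the visible improvements users should eventually see.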

When feedback feels like it disappears into a void, people stop offering it. But when users see that their voice helps shape the product, it builds trust and a stronger connection to the system.

In an AI-driven product, feedback is also a signal that the system is alive, learning, and improving with its users, not just for them.

Want to design AI that earns trust?

AI changes what’s possible. But UX defines what’s meaningful.

At Crazydes, we believe designing for AI isn’t just a technical challenge; it’s a human one. It calls for curiosity, empathy, and the courage to ask better questions. We’re not here to chase trends or tame algorithms. We’re here to ensure the systems we build serve the people they touch with clarity, dignity, and care.

If you are working on an AI product and want it to feel more like a partner than a machine, let’s talk.

Because the best technology isn’t just powerful. It’s thoughtful.